Low code coverage indicates that code is not well tested and is therefore risky to change. Unfortunately, high code coverage does not guarantee that the tests are well written. Mutation testing promises to give us some of that certainty by checking how well our tests hold up to changes in code behavior.

To follow along with this article, I encourage you to use the example project. The following commit provides the blank slate we need:

What is mutation testing?

Originally proposed by Richard Lipton in 1971, mutation testing is a technique used to evaluate the quality of software tests. Among others, it is used by some teams at Thoughtworks and Google.

In the simplest of terms, it is the act of automatically modifying existing code in small ways, then checking if our tests will fail. If a code modification does not cause at least one test to fail, that means we have a gap in our test suite or our tests are not written well enough (as they might be missing assertions).

Types of modifications applied to the code are called mutators. A modified version of the code is called a mutant. If a previously passing test fails when executed against it, that’s called killing the mutant. Test suites are measured by the percentage of mutants that they kill.
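To make the vocabulary concrete, here is a minimal plain-Java sketch of a mutant being killed. Everything in it (the `isAdult` method, the hand-written mutant, the boolean-returning "test") is a hypothetical illustration and not part of the example project. A real tool such as Pitest generates mutants by modifying compiled code rather than by hand.

```java
import java.util.function.IntPredicate;

// Hypothetical illustration: none of these names come from the example project.
class MutantKillingDemo {

    // Original production code.
    static boolean isAdult(int age) {
        return age >= 18;
    }

    // Hand-written equivalent of a mutant with the conditional negated.
    static boolean isAdultMutant(int age) {
        return age < 18;
    }

    // Stand-in for a unit test: returns true when the test passes.
    static boolean testAdultIsRecognised(IntPredicate implementation) {
        return implementation.test(30);
    }

    // A mutant is killed when a test that passes against the original
    // code fails against the mutated code.
    static boolean isKilled(IntPredicate original, IntPredicate mutant) {
        return testAdultIsRecognised(original) && !testAdultIsRecognised(mutant);
    }

    public static void main(String[] args) {
        boolean killed = isKilled(MutantKillingDemo::isAdult, MutantKillingDemo::isAdultMutant);
        System.out.println("Mutant killed: " + killed);
    }
}
```

Here the test passes against `isAdult` and fails against `isAdultMutant`, so the mutant counts as killed.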

Adding Pitest to a Maven JUnit 5 project

To add Pitest to your project, add the following to the build plugins in your pom.xml file:

<build>
  <plugins>
    <!-- https://mvnrepository.com/artifact/org.pitest/pitest-maven -->
    <plugin>
      <groupId>org.pitest</groupId>
      <artifactId>pitest-maven</artifactId>
      <version>1.8.0</version>
      <dependencies>
        <!-- https://mvnrepository.com/artifact/org.pitest/pitest-junit5-plugin -->
        <dependency>
          <groupId>org.pitest</groupId>
          <artifactId>pitest-junit5-plugin</artifactId>
          <version>0.16</version>
        </dependency>
      </dependencies>
    </plugin>
  </plugins>
</build>

This is the most basic configuration of Pitest, with support for JUnit 5. However, the plugin is highly configurable, so make sure to take a look at the documentation if you need something more specialized.

The default mutators which are going to run are:

- CONDITIONALS_BOUNDARY – replaces the relational operators <, <=, >, >= with their boundary counterparts (e.g. < becomes <=)

- EMPTY_RETURNS – replaces return values with an ‘empty’ value for that type (e.g. empty strings, empty Optionals, zero for integers)

- FALSE_RETURNS – replaces primitive and boxed boolean return values with false

- TRUE_RETURNS – replaces primitive and boxed boolean return values with true

- NULL_RETURNS – replaces return values with null (unless annotated with NotNull or mutable by EMPTY_RETURNS)

- INCREMENTS – replaces increments (++) with decrements (--) and vice versa

- INVERT_NEGS – inverts negation of integer and floating point numbers (e.g. -1 to 1)

- MATH – replaces binary arithmetic operations with another operation (e.g. + to -)

- NEGATE_CONDITIONALS – negates conditionals (e.g. == to !=)

- PRIMITIVE_RETURNS – replaces primitive return values with 0 (unless they already return zero)

- VOID_METHOD_CALLS – removes method calls to void methods
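As an illustration of why individual mutators matter, the sketch below writes out by hand what a CONDITIONALS_BOUNDARY mutant might look like and shows that it survives a test which never touches the boundary value. The `freeShipping` rule and both "tests" are hypothetical and are modelled as plain methods returning true when the test passes.

```java
import java.util.function.IntPredicate;

// Hypothetical illustration: none of these names come from the example project.
class BoundaryMutantDemo {

    // Original: free shipping for orders of 100 or more.
    static boolean freeShipping(int total) {
        return total >= 100;
    }

    // Hand-written equivalent of a CONDITIONALS_BOUNDARY mutant: >= becomes >.
    static boolean freeShippingMutant(int total) {
        return total > 100;
    }

    // Test that only uses values far away from the boundary.
    static boolean looseTest(IntPredicate impl) {
        return impl.test(150) && !impl.test(50);
    }

    // Test that pins down the boundary value itself.
    static boolean boundaryTest(IntPredicate impl) {
        return impl.test(100) && !impl.test(99);
    }

    public static void main(String[] args) {
        // The loose test still passes on the mutant, so the mutant survives.
        System.out.println("Loose test passes on mutant: "
                + looseTest(BoundaryMutantDemo::freeShippingMutant));
        // The boundary test fails on the mutant, so the mutant is killed.
        System.out.println("Boundary test passes on mutant: "
                + boundaryTest(BoundaryMutantDemo::freeShippingMutant));
    }
}
```

Both tests pass against the original method. Only the one that exercises the value 100 can tell the original and the mutant apart.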

All available mutators are listed and described on Pitest’s Mutator Overview page.

While powerful, mutation testing is still computationally expensive. If better performance is needed, consider scaling the number of threads used by Pitest:

<plugin>
  <groupId>org.pitest</groupId>
  <artifactId>pitest-maven</artifactId>
  <!-- ... -->
  <configuration>
    <threads>2</threads>
  </configuration>
</plugin>

Additionally, it might make sense to exclude some classes or test classes (like integration tests) from the analysis, depending on your situation.

To generate a Pitest report, run the following command:

mvn clean test-compile org.pitest:pitest-maven:mutationCoverage

The generated report will become available under ./target/pit-reports.

How to interpret the results

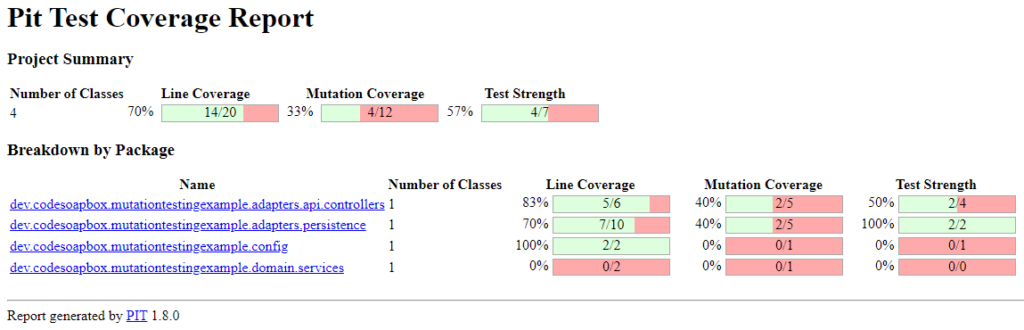

The main page of the Pit Test Coverage report shows three metrics – line coverage, mutation coverage and test strength.

Line coverage

Line coverage is the measure of how many lines are actually covered by tests.

In this example, 14 out of 20 lines were covered by tests. This tells us that the code is not sufficiently tested, but it is hardly news if the project already uses some sort of code coverage tracking.

Mutation coverage

Mutation coverage measures how many mutants were killed out of all mutants created.

Here, 12 mutants were created but only 4 were killed.

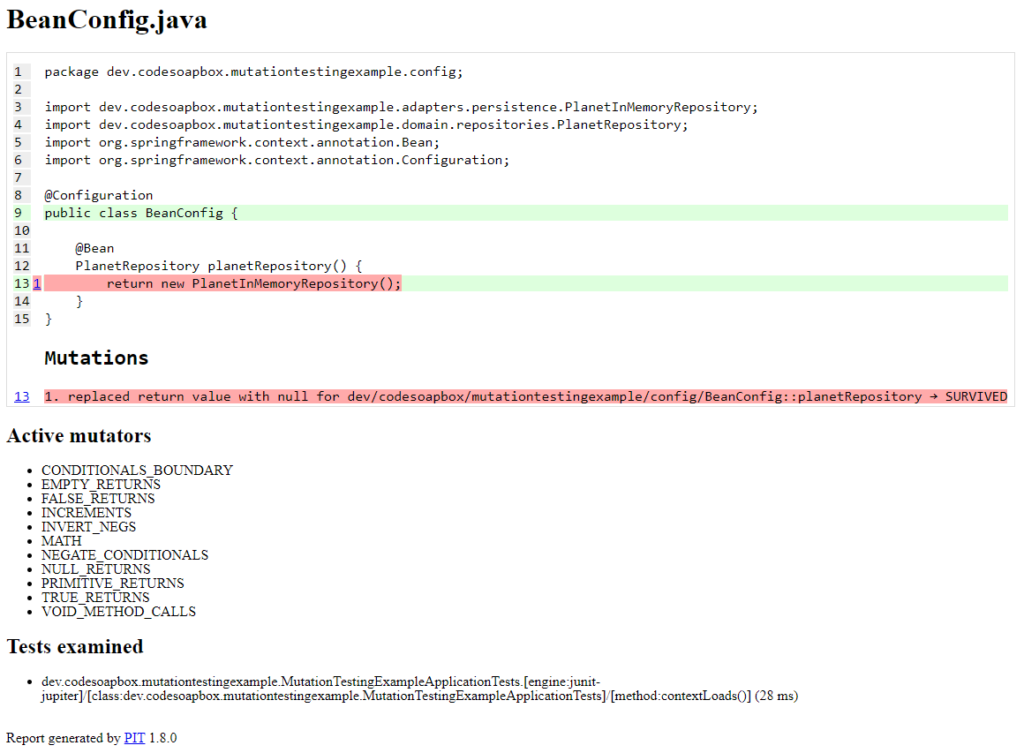

One of those mutants is connected with the ...config.BeanConfig class. Navigating to it in the report is going to provide more information:

The mutation happened on line 13, where the NULL_RETURNS mutator modified the code to return null. The only test which exercises this code is MutationTestingExampleApplicationTests::contextLoads which lacks any assertions. Thus, the test passed and the mutant was not killed.

Another surviving mutant was in ...domain.services.DeadCodeService – it wasn’t killed, as there was no test which could fail.

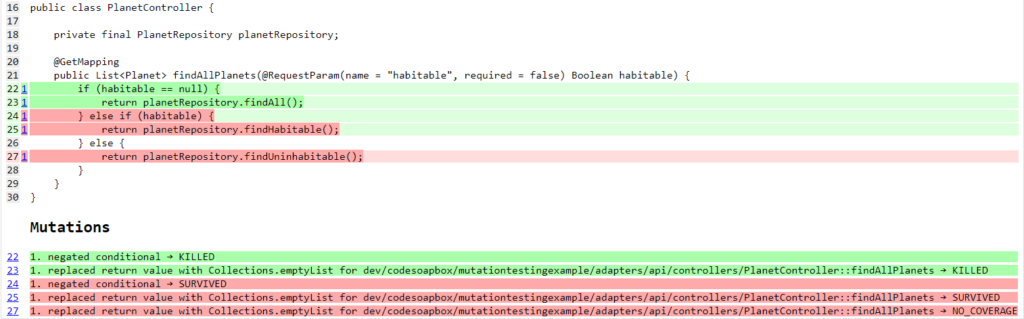

A few other mutants survived for the PlanetController class.

Two of them survived because the PlanetControllerIT::shouldFindAllHabitablePlanets test is badly written and does not have an assertion on the response. Thus, the mutant returning an empty list from findAllPlanets (on line 25) did not fail any tests. The mutant modifying line 27 survived due to lack of test coverage.

// Excerpt from PlanetControllerIT.class:
@Test
void shouldFindAllHabitablePlanets() throws Exception {
    when(planetRepository.findHabitable())
            .thenReturn(singletonList(new Planet("Omicron Persei 8", false)));

    // This is a weak test as it does not verify the response
    mockMvc.perform(get("/planets?habitable=true"))
            .andDo(print())
            .andExpect(status().isOk());
}
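The effect of the missing assertion can be reproduced without Spring. The following is a plain-Java stand-in, not the project's controller or MockMvc: `findPlanets`, its hand-written EMPTY_RETURNS-style mutant and both "tests" are hypothetical names used only to show why a check on the response content is what kills the mutant.

```java
import java.util.Collections;
import java.util.List;
import java.util.function.Supplier;

// Hypothetical stand-in for the controller and its tests (requires Java 9+ for List.of).
class WeakAssertionDemo {

    // Original behaviour: returns one planet name.
    static List<String> findPlanets() {
        return List.of("Omicron Persei 8");
    }

    // Hand-written equivalent of an EMPTY_RETURNS mutant.
    static List<String> findPlanetsMutant() {
        return Collections.emptyList();
    }

    // Weak test: only checks that the call completes,
    // comparable to asserting nothing but the response status.
    static boolean weakTest(Supplier<List<String>> endpoint) {
        endpoint.get();
        return true;
    }

    // Stronger test: also verifies the returned content.
    static boolean strongTest(Supplier<List<String>> endpoint) {
        return endpoint.get().equals(List.of("Omicron Persei 8"));
    }

    public static void main(String[] args) {
        System.out.println("Weak test passes on mutant: "
                + weakTest(WeakAssertionDemo::findPlanetsMutant));
        System.out.println("Strong test passes on mutant: "
                + strongTest(WeakAssertionDemo::findPlanetsMutant));
    }
}
```

The weak test passes for both versions, so the mutant survives. The strong test passes only for the original, which is what killing the mutant requires.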

Test strength

Test strength measures how many mutants were killed out of all mutants for which there was test coverage. This means that mutants that survive due to lack of coverage will not count towards this statistic.

As an example, a mutant was created that returns an empty list when PlanetController is asked to find uninhabitable planets. However, as there are no tests for this behavior, this mutant gets removed from the count. That is why the final value for this metric for ...api.controllers is 2 out of 4 mutants, not 2 out of 5 (as is the case with the mutation coverage metric).

Test strength has been suggested as a better metric than mutation coverage for validating builds (e.g. only allowing Merge Requests to be merged if the metric is high enough).
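The difference between mutation coverage and test strength for ...api.controllers comes down to simple arithmetic. The sketch below follows the definitions given in this article and uses the counts quoted above. The method names are hypothetical, and the rounding is an assumption that may not match how Pitest rounds its figures.

```java
// Hypothetical helper: reproduces the two metrics from the definitions in this article.
class PitestMetricsDemo {

    // Killed mutants as a percentage of all mutants created.
    static long mutationCoverage(int killed, int total) {
        return Math.round(100.0 * killed / total);
    }

    // Killed mutants as a percentage of mutants that had test coverage.
    static long testStrength(int killed, int total, int withoutCoverage) {
        return Math.round(100.0 * killed / (total - withoutCoverage));
    }

    public static void main(String[] args) {
        // ...api.controllers: 5 mutants, 2 killed, 1 without any test coverage.
        System.out.println("Mutation coverage: " + mutationCoverage(2, 5) + "%");
        System.out.println("Test strength: " + testStrength(2, 5, 1) + "%");
    }
}
```

With these numbers, the same two killed mutants give 40% mutation coverage and 50% test strength, because the uncovered mutant is left out of the second calculation.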

What’s next?

By now you should have a general understanding of what mutation testing is, how it helps us be more confident when working with code and how to use Pitest to generate a coverage report. However, there is much more to explore in terms of understanding the intricacies of this approach and ways of configuring it.

I highly recommend reading the Pitest FAQ, as well as its Maven Quickstart Guide for more information on how to fine-tune your particular configuration.

For a more detailed, battle-tested look at using Pitest in practice, there’s the Don’t let your code dry article on its blog.

Finally, the Pitest team also provides a step-by-step guide on how to integrate Pitest with your Pull Request pipeline. Bear in mind, however, that the proposed solution uses Arcmutate plugins, which are not free for commercial use. Pricing for those plugins can be found on the Arcmutate website.

The work done in this post is contained in the commit 97d3a860a6ad60ca6d9fc5a39106a2359421303b.

Photo by Braňo
